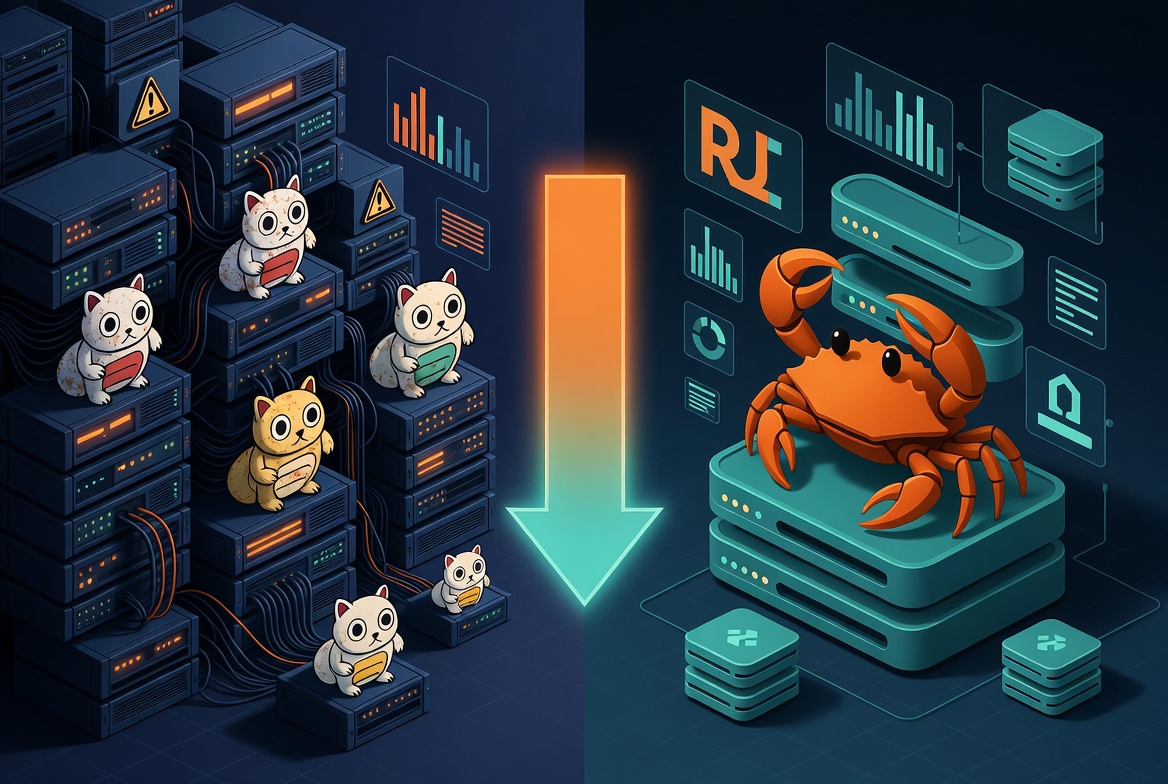

Xiao Li Rewrote Go Monolith to Rust Axum, Company Saved $800K+ Yearly, His Salary Hit $2M

That Unexpected Salary Increase

Last week Xiao Li asked me out for dinner. The guy’s usually tight with money, so if he’s treating, something’s up.

After a few drinks, he finally spilled: “I rewrote our backend from Go to Rust. Saved the company over 800K a year, and they bumped my salary to 2 million.”

I nearly dropped my chopsticks. I know Xiao Li’s a solid developer, but this seemed way too good to be true.

“Don’t get ahead of yourself,” Xiao Li said, downing his beer. “It’s not that simple. Most rewrite projects end up being career trapdoors—slow progress, high risk, endless blame games. I got out unscathed because the conditions happened to align perfectly.”

How Broken Was Their Original System

When Xiao Li took over the Go monolith, it had been running for years. The code wasn’t terrible, not a complete mess. But it was—how do I put it—tired.

“Like that fridge you’ve had for five or six years,” Xiao Li said. “It’s not broken, but it hums louder than before, uses more electricity, and doesn’t cool as well. You say it’s broken? Still works. You say it’s good? Never feels right.”

Check out the numbers:

- Peak capacity: 28,000 requests per second

- About 3 failures per week

- Monthly infrastructure costs: 130K+

- An unspoken “don’t touch that code” culture forming in the team

Most failures weren’t catastrophic outages. They were latency spikes, partial service slowdowns, retry storms. Users noticed slowness but didn’t bother calling support. These are the worst kind of problems—not serious enough to get budget approval for fixes, but draining team energy constantly.

[Client] -> [Load Balancer] -> [Go Monolith: Auth, Search, Billing] -> [Main Database]

Everything was crammed together. Optimizing search meant worrying about billing. Shipping a new feature meant testing the whole system. Scaling up meant scaling every module together, even though only search needed more resources.

“This architecture isn’t unusable,” Xiao Li said. “But once you hit a certain scale, money starts pouring out. Splitting it into services would let us size and tune each piece on its own.”

That’s why Xiao Li decided on the Go-to-Rust migration.

Those Three Painful Months Learning Rust

Xiao Li didn’t jump into production code right away. The first three months were all self-study on nights and weekends, with no changes to the company’s codebase.

Coming from Go to Rust, some things felt especially awkward. Go’s concurrency model—spin up a goroutine and you’re done, runtime handles scheduling. Not Rust—you gotta explicitly tell it when this task runs, where it runs, and who owns the data.
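To make the “who owns the data” point concrete, here is a minimal sketch using only the Rust standard library. It is not the team’s code: `parallel_sum` and the chunking scheme are made up for illustration. The thing to notice is that each thread has to be handed its data explicitly, here by moving a cloned `Arc` into the closure.

```rust
use std::sync::Arc;
use std::thread;

/// Sums `data` on `workers` OS threads. Hypothetical helper for illustration.
/// Each thread receives its own `Arc` handle, and `move` transfers that handle
/// into the closure, so ownership is spelled out at the spawn site.
fn parallel_sum(data: Vec<u64>, workers: usize) -> u64 {
    let workers = workers.max(1);
    let data = Arc::new(data);
    let chunk = (data.len() + workers - 1) / workers; // ceiling division
    let mut handles = Vec::new();

    for w in 0..workers {
        let shared = Arc::clone(&data); // explicit: this thread co-owns the data
        handles.push(thread::spawn(move || {
            let start = (w * chunk).min(shared.len());
            let end = (start + chunk).min(shared.len());
            shared[start..end].iter().sum::<u64>()
        }));
    }

    // Waiting is explicit too: nothing joins the threads unless we ask.
    handles
        .into_iter()
        .map(|h| h.join().expect("worker panicked"))
        .sum()
}
```

Calling `parallel_sum((1..=100).collect(), 4)` splits the numbers across four threads and adds the partial sums. Remove the `move` or the `Arc::clone` and the compiler refuses to build it, which is the friction Xiao Li is describing.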

“At first I thought Rust was just making my life difficult,” Xiao Li said. “After a while, I realized it’s actually pulling out the complexity Go hides in the runtime. Go’s scheduler is excellent, but you can’t see or modify the decisions it makes for you. Rust makes you face those decisions head-on—exhausting, sure, but when things go wrong, you know exactly where to look.”

This Go-to-Rust rewrite process gave Xiao Li a deep appreciation for the differences between the two languages.

A concrete example: in Go, when a goroutine blocks, the runtime automatically switches to another one. You might have no idea where the blocking happened until traffic spikes one day and everything freezes. Rust’s Tokio won’t hide these issues for you: a blocking call inside an async task stalls the worker thread it runs on, every other task queued on that thread stops making progress, and the problem tends to show up early.
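A toy model shows why one blocking call hurts so much. The sketch below is not Tokio; it is a simulated single worker with a made-up `Job` type and a fake clock in milliseconds, written only to show the queueing effect. In real Tokio code the usual remedy is to hand blocking work to `tokio::task::spawn_blocking`, which the second function imitates with a hypothetical one-thread blocking pool.

```rust
/// One unit of work with a simulated cost in milliseconds (no real sleeping).
#[derive(Clone, Copy)]
struct Job {
    cost_ms: u64,
    blocking: bool,
}

/// Finish time of each job when a single worker runs them strictly in order.
/// A blocking job holds the worker, so everything queued behind it waits.
fn finish_times_inline(jobs: &[Job]) -> Vec<u64> {
    let mut clock: u64 = 0;
    jobs.iter()
        .map(|job| {
            clock += job.cost_ms;
            clock
        })
        .collect()
}

/// Same queue, but blocking jobs go to a separate one-thread pool (an
/// assumption of this model), so the worker only pays for non-blocking jobs.
fn finish_times_offloaded(jobs: &[Job]) -> Vec<u64> {
    let mut worker_clock: u64 = 0;
    let mut pool_clock: u64 = 0;
    jobs.iter()
        .map(|job| {
            if job.blocking {
                // Starts at hand-off time, or later if the pool is still busy.
                pool_clock = pool_clock.max(worker_clock) + job.cost_ms;
                pool_clock
            } else {
                worker_clock += job.cost_ms;
                worker_clock
            }
        })
        .collect()
}
```

With one 500 ms blocking job ahead of two 1 ms jobs, the inline version finishes them at 500, 501 and 502 ms, while the offloaded version finishes the two small jobs at 1 and 2 ms. That gap is what a latency spike looks like from the inside.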

“That ‘won’t hide your problems from you’ design philosophy,” Xiao Li said, “saved us several times later. It’s the key reason this performance optimization succeeded.”

The First Cut: Search Service Only

Xiao Li’s team didn’t go for a big-bang rewrite. First cut was just the search service.

Why search? Several reasons: read-heavy, simple state; clean API boundaries, no other modules affected; most resource-intensive at the time; quick rollback to Go version if things went wrong.

[Client]

|

[Load Balancer]

|

[Go Monolith] ---> [Rust Axum Search Service]

|

[Legacy Database]

Two systems ran in parallel for a month. Traffic mirroring compared results, ensuring the Rust version returned identical data to the Go version.
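The comparison step of traffic mirroring can be sketched in a few lines. Everything here is an assumption for illustration: `mirror_mismatches` is a hypothetical helper, and the two backends are plain closures standing in for the Go and Rust search paths. The article does not say how the team actually compared results.

```rust
/// Replays each query against both implementations and returns the queries
/// whose results differ. `legacy` stands in for the Go path and `candidate`
/// for the Rust path; both are closures so the sketch stays self-contained.
/// Results are compared in order; a real check might normalize ordering first.
fn mirror_mismatches<L, C>(queries: &[&str], legacy: L, candidate: C) -> Vec<String>
where
    L: Fn(&str) -> Vec<String>,
    C: Fn(&str) -> Vec<String>,
{
    queries
        .iter()
        .copied()
        .filter(|&q| legacy(q) != candidate(q))
        .map(|q| q.to_string())
        .collect()
}
```

An empty return value means the two versions agreed on every mirrored query. Anything else is a list of inputs to investigate before shifting real traffic.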

The result surprised even Xiao Li: search throughput jumped from 28K to 120K requests per second, about a 4.3x improvement, with more stable P99 latency under high load.

But Xiao Li was honest about it: “Those numbers are inflated. The new system had more aggressive caching, shorter request paths, and a data model optimized for search. You can’t simply say Rust is 4.3x faster than Go.”

Even so, splitting search into its own service delivered a real performance gain.

What really thrilled him wasn’t the speed; it was the stability. Before, traffic peaks would trigger retry storms, and then the whole system would shake. After the switch to Rust, the search service under pressure was remarkably boring. It just ran steadily, no drama.

“Boring,” Xiao Li said, “is the highest compliment in backend engineering.”

A Year of Gradual Refactoring

After three months of stable search service operation, leadership started asking: should we migrate the rest?

What Xiao Li’s team did for the next year can be summed up in one sentence: always walk on two legs, with the old system and the new one both running.

Stage 1: [Client] -> [Load Balancer] -> [Go Monolith]

Stage 2: [Client] -> [Load Balancer] -> [Go Monolith] -> [Rust Microservices]

Stage 3: [Client] -> [Load Balancer] -> [Rust Microservices] -> [Go Residuals]

Through this gradual Go-to-Rust migration strategy, they successfully built a microservices architecture, achieving significant cost optimization.

“Istio was huge in this process,” Xiao Li said. “We could shift traffic by percentage, rollback at the mesh layer if something went wrong—no code redeployment needed.”
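Istio does percentage shifting in mesh configuration, not in application code, so the following is only a sketch of the idea. The `Backend` enum, the `route` function, and the choice of a sticky hash (the same key always lands on the same side) are all assumptions for illustration, not a description of how Istio or the team split traffic.

```rust
#[derive(Debug, PartialEq, Clone, Copy)]
enum Backend {
    GoMonolith,
    RustService,
}

/// FNV-1a, 64-bit: a small, stable hash so a given request key always maps
/// to the same bucket, run after run.
fn fnv1a(key: &str) -> u64 {
    let mut hash: u64 = 0xcbf29ce484222325;
    for byte in key.bytes() {
        hash ^= u64::from(byte);
        hash = hash.wrapping_mul(0x100000001b3);
    }
    hash
}

/// Sends roughly `rust_percent` percent of keys to the Rust service.
/// Raising the percentage only ever moves keys from Go to Rust, and setting
/// it back to 0 is the rollback.
fn route(key: &str, rust_percent: u8) -> Backend {
    let bucket = fnv1a(key) % 100; // 0..=99
    if bucket < u64::from(rust_percent.min(100)) {
        Backend::RustService
    } else {
        Backend::GoMonolith
    }
}
```

The rollback Xiao Li describes corresponds to changing one number: `route(key, 0)` sends everything back to the Go monolith without touching either service.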

OpenTelemetry was another hero. They had tracing in Go too, but in Rust a span’s lifetime is tied to the structure of the code: it opens when a scope is entered and closes when that scope ends. Resource leaks, blocking calls, and request fan-outs are all visible at a glance. Problem-solving changed from detective work to reading a map.
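The scope-bound behaviour comes from Rust’s drop semantics, and it can be imitated with the standard library alone. The sketch below is a stand-in, not the real `tracing` or OpenTelemetry API: `Span`, `Log`, and `handle_search` are hypothetical names, and the “exporter” is just an in-memory list.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Shared in-memory event log (a stand-in for a real tracing exporter).
type Log = Rc<RefCell<Vec<String>>>;

/// Records "enter" when created and "exit" when it goes out of scope.
struct Span {
    name: &'static str,
    log: Log,
}

impl Span {
    fn enter(name: &'static str, log: &Log) -> Span {
        log.borrow_mut().push(format!("enter {}", name));
        Span { name, log: Rc::clone(log) }
    }
}

impl Drop for Span {
    fn drop(&mut self) {
        self.log.borrow_mut().push(format!("exit {}", self.name));
    }
}

/// Hypothetical request handler: the nesting of spans mirrors the nesting
/// of scopes, with no explicit "end span" calls to forget.
fn handle_search(log: &Log) {
    let _request = Span::enter("handle_search", log);
    {
        let _cache = Span::enter("cache_lookup", log);
    } // `_cache` is dropped here, closing its span
    let _db = Span::enter("db_query", log);
} // `_db` is dropped first, then `_request`
```

Because the compiler decides where each guard is dropped, a span cannot outlive the code it describes. A span that stays open too long on the trace points straight at the scope that is holding things up.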

This observability changed how they handled failures. Before: “Something’s wrong again, what are the symptoms?” Now: “This spot on the graph is anomalous, check if it’s that logic.”

The Final Numbers

After completing the migration:

| Metric | Go Monolith | Rust Microservices |
|---|---|---|
| Peak Throughput | 28K RPS | 187K RPS |
| Monthly Infrastructure Cost | 130K | 30K |
| Annual Savings | - | ~800K |
| Production Failures | ~3/week | 0 in 14 months |

Dropping from 130K to 30K a month is a 100K monthly gap, which on its own would annualize to about 1.2M; the 800K-plus Xiao Li quotes is the more conservative figure. Either way, this isn’t just “Rust is fast.” It’s the result of several factors stacking up: predictable async scheduling with no hidden runtime overhead; Axum middleware that supports explicit backpressure control; services broken down for better fault isolation; and a higher cache hit rate with fewer retries.
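“Explicit backpressure” is easier to picture with a bounded queue. In an Axum service this would normally live in middleware layers; the sketch below is a standard-library stand-in, and `Inbox`, `Admission`, and `offer` are hypothetical names. The key property is that a full queue answers “no” immediately instead of letting requests pile up and time out, which is what feeds a retry storm.

```rust
use std::sync::mpsc::{sync_channel, Receiver, SyncSender, TrySendError};

/// What the caller is told about a request.
#[derive(Debug, PartialEq)]
enum Admission {
    Accepted,
    Shed,
}

/// A bounded inbox: at most `capacity` requests may wait at once.
struct Inbox {
    tx: SyncSender<String>,
}

impl Inbox {
    fn new(capacity: usize) -> (Inbox, Receiver<String>) {
        let (tx, rx) = sync_channel(capacity);
        (Inbox { tx }, rx)
    }

    /// Never blocks: a full queue means "shed now", not "queue forever".
    fn offer(&self, request: &str) -> Admission {
        match self.tx.try_send(request.to_string()) {
            Ok(()) => Admission::Accepted,
            Err(TrySendError::Full(_)) | Err(TrySendError::Disconnected(_)) => Admission::Shed,
        }
    }
}
```

With `Inbox::new(2)`, the first two offers are accepted and the third is shed until a worker takes something off the receiver. The caller gets a fast, honest rejection it can turn into a 503, and the service stays boring under load.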

There were costs too. Compilation time got longer. Hiring Rust developers is harder than hiring Go engineers. New team members need more systems programming background. Some internal tools stayed in Go because rewriting them wasn’t worth it.

Why This Rewrite Turned Into a Salary Increase

“The raise isn’t because Rust is amazing,” Xiao Li said. “It’s because this rewrite changed a few things that really matter to the company.”

The 800K annual cost savings is auditable: finance can see it directly on the bills. The team that used to be worn down by three failures a week got that energy back. New product features no longer come with “might bring down the backend” anxiety. Capacity planning meetings changed from “how many machines to add” to “current resources will last a year.”

“Most rewrites stop at ‘it runs,’” Xiao Li said. “This rewrite changed the company’s cost structure and operational rhythm. That’s what leadership notices. The raise isn’t a reward—it’s the company revaluing my economic contribution.”

Things He Wouldn’t Do Again

Xiao Li said if he could do it over, some things he’d handle differently.

First, he underestimated the social costs of rewriting. Team members worried about being sidelined, felt their code was being invalidated. These emotions needed addressing, but he was too focused on the tech side.

Second, Rust isn’t suitable for all scenarios. Admin interfaces, low-frequency batch tasks—he wouldn’t touch those now. Rewriting them had too little reward for the risk. This rewrite experience taught him that choosing the right scenarios matters more than the technology itself.

Third, without a service mesh and distributed tracing, don’t start a Go-to-Rust migration. They achieved zero-downtime switches thanks to Istio and OpenTelemetry. Without this infrastructure, “smooth migration” is just empty words.

Should You Do It

Before we left, I asked Xiao Li: “So should our company try this too?”

He asked me back: “Does it hurt now?”

If your system runs stably, costs are acceptable, and the team isn’t exhausted, then rewriting is creating problems, not solving them. Not all systems need rewriting—most actually don’t.

A Go-to-Rust migration like this makes sense only when the business feels the pain, is willing to take on a new risk to eliminate the old one, and expects real returns in performance and cost.

“Our situation happened to fit these conditions,” Xiao Li said. “What about yours?”


Follow Monster Code, and next we’ll dive into several pitfalls in Rust async programming and how to debug them with Tokio Console.