You Only Have Three Servers, So Why Are You Using Kubernetes?


Interviews ask you to design aircraft carriers, then you get hired to tighten screws. We’ve all heard this cliché in our industry, but have you noticed? Now even “tightening screws” requires memorizing an aircraft carrier manual first.

Open any Java backend interview question bank and you’ll find the same Kubernetes boilerplate:

- What’s the Pod lifecycle?

- How many types of Services are there?

- What’s the difference between Deployment and StatefulSet?

- How do you implement the sidecar pattern?

You recite it perfectly, the interviewer nods approvingly, and you get the offer.

Then what? You excitedly log into your company’s servers, only to find three machines in total, each running a systemd service, with deployments done by SSHing in and running git pull.

All that HPA autoscaling, Istio service mesh, and Helm chart orchestration you carefully prepared? Useless. Not because the company is behind, but because they’re clear-headed.

I had a friend who wasn’t so clear-headed. His startup had just raised angel funding, the product hadn’t launched yet, users had been pestering the team in the group chat for months, and he went off to learn Kubernetes.

For three whole months he soaked in YAML files every day, debugged network policies, studied service meshes, and felt very Google.

So I asked him: how many users do you have?

He said they were still in beta, maybe a few dozen.

I asked how many servers.

Two, he said, and they were still debating whether to add a third.

I asked how they deployed right now.

SSH in and git pull, he said.

I almost spit out my coffee.

Two servers, so why on earth are you using Kubernetes? What’s it like? It’s like having just two people in your household but insisting on buying a fifty-seat bus to commute. Every morning you hoist your wife into the driver’s seat, hop in the back, drive this empty monstrosity to work, then hunt for a bus-sized parking spot and spend half an hour backing in.

Great vehicle, but really unnecessary.

The name Kubernetes comes from the Greek for “helmsman.” When Google open-sourced it, the world went crazy: “This is Google’s secret to managing hundreds of thousands of servers, and we need it too.”

But the question is, how many servers do you have?

What’s the real situation for most startups?

- One main server running the app

- One hosted Postgres or MySQL database

- Add a Redis cache, done

Background jobs? A systemd timer plus a bash script handles it.

Backups? Cron job runs at 3 AM every day.

It’s that simple.
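The 3 AM backup cron job could look roughly like the script below. It is a minimal sketch, assuming `pg_dump` is installed and picks up connection settings from the standard `PG*` environment variables or `~/.pgpass`; `BACKUP_DIR`, `KEEP_DAYS`, the function names, and the `run` argument are hypothetical choices for illustration.

```bash
#!/usr/bin/env bash
# Minimal nightly Postgres backup sketch (names and defaults are hypothetical).
# Assumes pg_dump reads connection details from PG* env vars or ~/.pgpass.

BACKUP_DIR="${BACKUP_DIR:-/var/backups/app}"   # where compressed dumps go
KEEP_DAYS="${KEEP_DAYS:-14}"                   # delete dumps older than this

backup_db() {
  local db="$1"
  local out="$BACKUP_DIR/$db-$(date +%Y%m%d-%H%M%S).sql.gz"
  mkdir -p "$BACKUP_DIR"
  # pipefail makes the subshell fail if pg_dump fails, not only if gzip does;
  # a partial file is removed so it is never mistaken for a good backup.
  ( set -o pipefail; pg_dump "$db" | gzip > "$out" ) || { rm -f "$out"; return 1; }
  echo "$out"
}

prune_backups() {
  # Remove compressed dumps last modified more than KEEP_DAYS days ago.
  find "$BACKUP_DIR" -maxdepth 1 -type f -name '*.sql.gz' -mtime +"$KEEP_DAYS" -delete
}

# Only act when called as "backup.sh run <dbname>", so the functions
# can also be sourced and tested without touching a database.
if [ "${1:-}" = "run" ]; then
  backup_db "${2:?usage: backup.sh run <dbname>}" && prune_backups
fi
```

Saved as, say, `/app/bin/backup.sh` (a hypothetical path), the matching crontab entry would be `0 3 * * * /app/bin/backup.sh run appdb >> /var/log/app-backup.log 2>&1`, where `appdb` is a placeholder database name.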

Let me show you a real systemd config that’s been running in production for years:

```ini
[Unit]
Description=Application Server
After=network.target

[Service]
User=app
WorkingDirectory=/app
ExecStart=/app/bin/server
Restart=on-failure
RestartSec=10
StandardOutput=journal
StandardError=journal

[Install]
WantedBy=multi-user.target
```

That’s it. Fewer than twenty lines of config, and you can understand every single one.

When something breaks at 2 AM, you don’t need the docs or Stack Overflow; one glance tells you what’s wrong.

Same thing in K8s?

- Deployment

- Service

- ConfigMap

- Secret

- Ingress

That’s at least five or six files, each with dozens of lines of YAML. And this is just a basic single-service app, not counting monitoring, logging, network policies, and all that fancy stuff.

When my friend was learning K8s, I asked him why. His answer was a classic: because everyone else is using it. That sentence has killed more startups than bad ideas ever have. Our industry has a habit of acting as if we all work at Netflix, Google, or Meta: we read their tech blogs daily, watch their architecture talks, and conclude that we need to do the same. There’s a name for this: “optimization theater.” Great stage effects, zero real value.

Most early teams don’t lack container orchestration; they lack focus. What do your team meetings cover? K8s or Swarm, managed or self-hosted, Helm or plain manifests. Week after week of technology debates, while the product and user feedback get pushed to “when we have time.”

The problem was never orchestration. Three servers will carry you for six months; then it’s five, then ten. That’s linear growth, not a distributed-systems research project, and systemd can handle that load just fine.
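Linear growth shows up directly in the deploy step. As a minimal sketch, assuming every host has the app checked out at `/app`, accepts key-based SSH, and lets the deploy user run `sudo systemctl restart app.service` without a password, the “SSH in and git pull” routine becomes a loop. The function names and hostnames are hypothetical.

```bash
#!/usr/bin/env bash
# "SSH in and git pull", looped over a list of hosts (hypothetical helpers).
# Assumes /app is a git checkout on each host and passwordless sudo for
# restarting app.service.

deploy_host() {
  local host="$1"
  # BatchMode=yes fails immediately instead of prompting for a password.
  ssh -o BatchMode=yes "$host" \
    'cd /app && git pull --ff-only && sudo systemctl restart app.service'
}

deploy_all() {
  local host
  for host in "$@"; do
    echo "deploying $host"
    # Stop at the first failure so a bad release does not reach every box.
    deploy_host "$host" || { echo "deploy failed on $host" >&2; return 1; }
  done
}
```

With three servers you run `deploy_all app1 app2 app3`; with ten, you add seven names. The rolling, stop-on-first-failure order is one reasonable choice, not the only one.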

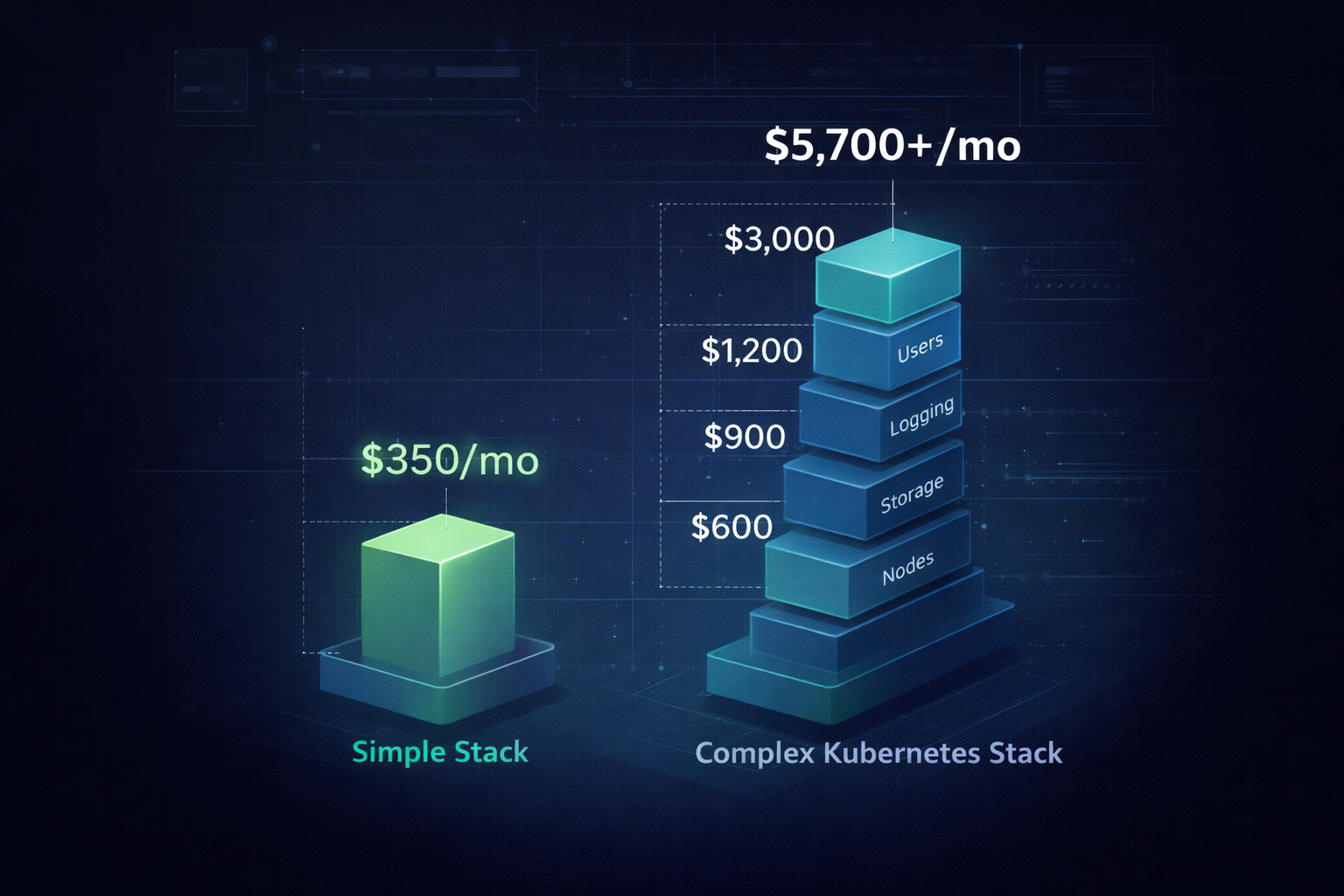

Let’s do the math: one t3.large server at about $100 a month, hosted Postgres with backups at $200, monitoring and logging at $50. For $350 a month, this setup can handle millions of requests.
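To put “millions of requests” in perspective, here is a rough worked example. The request count is an illustrative assumption, not a measured figure: three million requests in a 30-day month, spread evenly.

$$
\$100 + \$200 + \$50 = \$350 \ \text{per month}
$$

$$
\frac{3 \times 10^{6}\ \text{requests}}{30 \times 86{,}400\ \text{s}} = \frac{3{,}000{,}000}{2{,}592{,}000} \approx 1.16\ \text{requests/s}
$$

Real traffic is bursty, so peaks will run several times higher than this average, but that is still a modest rate for a single application server in a typical web app.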

K8s? The managed version charges you for the control plane first, then worker nodes, then storage, then load balancers, and then there’s the salary of the engineer who has to get up at 3 AM to fix a failed probe.

The real cost isn’t money, it’s time.

A junior developer can pick up systemd in a day, so a small team needs no K8s expert. With Kubernetes, even when nothing breaks, someone still has to watch it constantly.

Every month spent learning infrastructure you don’t need yet could have been spent learning something from your users.

Why do we prefer complexity? The reason is simple: fear. Fear that a simple solution won’t be enough later, fear of missing something important, fear that real engineers use complex systems and that simple ones make you look amateur. The fear is understandable, but it’s wrong. The most impressive engineers I’ve seen run systems so simple they’d shock you. One friend makes six figures a month with a single VPS and a few scripts. Another runs an entire trading platform on hosted services and systemd. Do they not know K8s? They know it very well; they just don’t use it. Not because they don’t understand it, but because they do.

When something breaks, you need to know why. systemd gives it to you straight: one `journalctl -u app.service --since today` and you see it. CPU pegged, memory exhausted, disk full; the failure is physical and understandable, so you just fix it. Kubernetes failures are abstract: a Pod is unhealthy, a Node is Ready but unavailable, a Service exists but routes nowhere. Debugging becomes an archaeological dig, layer by layer, only to discover a typo in some selector.
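A 2 AM triage pass for those physical failures can be a handful of standard Linux commands. This is a minimal sketch: `disk_over` and `triage` are hypothetical helper names, the 90% disk threshold is arbitrary, and `app.service` is the example unit from earlier.

```bash
#!/usr/bin/env bash
# Quick triage for "physical" failures: load, memory, disk, recent errors.
# Helper names and the 90% threshold are illustrative choices.

disk_over() {
  # Print "mountpoint use%" for each filesystem at or above the given percent.
  # df -P gives one line per filesystem; column 5 is use%, column 6 the mount.
  # Mount points containing spaces would be split by awk.
  local limit="$1"
  df -P 2>/dev/null | awk -v limit="$limit" 'NR > 1 {
    use = $5; sub(/%/, "", use)
    if (use + 0 >= limit + 0) print $6, $5
  }'
}

triage() {
  echo "== load ==";                 uptime
  echo "== memory (MiB) ==";         free -m
  echo "== disks at 90% or more =="; disk_over 90
  echo "== errors from app.service today =="
  journalctl -u app.service --since today -p err --no-pager | tail -n 20
}
```

Running `triage` prints each section in turn; an empty disk section simply means nothing is near full.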

Of course, there is a turning point. When a single machine really can’t keep up, when you deploy dozens of times a day, when fifty engineers push code at once, when you need a scheduler to decide which machine each workload runs on, that’s when K8s is a gift rather than a burden. And here’s what no one wants to say out loud: by the time you reach that stage, you have the money, the time, and a real business that justifies the complexity. Migration is hard, but worth it. Migrating early? That’s pure suffering.

The tools that survive are the ones you can understand, can teach, and can abuse without breaking. Nginx, Postgres, MySQL, Bash, systemd: these tools aren’t cool and don’t brag, but they fail predictably and age gracefully. Infrastructure should be invisible; if it demands too much of your attention, it’s already too expensive.

Three takeaways. First, K8s is powerful and systemd is enough; power isn’t free, and “enough” is underrated. Second, if your goal is to build products, ship features, and sleep soundly, choose boring, not because it’s simple but because it’s honest. Third, the founder who spent three months learning K8s could have spent that time getting ten thousand users. Don’t be that founder.

Remember: enough is the highest form of sophistication.

Your Cheat Sheet

```bash
# Check service status
systemctl status app.service

# Start, stop, restart
systemctl start app.service
systemctl stop app.service
systemctl restart app.service

# Reload systemd after creating or editing a unit file
systemctl daemon-reload

# View logs
journalctl -u app.service --since today
journalctl -u app.service -f   # follow mode

# Enable on boot
systemctl enable app.service

# List timers (cron-style)
systemctl list-timers
```
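For the background jobs mentioned earlier, a systemd timer is what `systemctl list-timers` reports on. The bash function below is a minimal sketch, not a prescribed layout: the function name `write_timer_units`, the job name `nightly-job`, the script path, and the `app` user are hypothetical placeholders. It writes a oneshot service plus a matching timer into whatever directory you pass in.

```bash
#!/usr/bin/env bash
# Write a systemd service + timer pair for a scheduled job (hypothetical helper).
# Usage: write_timer_units <dir> <name> <script> <OnCalendar expression>

write_timer_units() {
  local dir="$1"      # normally /etc/systemd/system
  local name="$2"     # unit base name, e.g. nightly-job
  local script="$3"   # absolute path of the script to run
  local when="$4"     # OnCalendar value, e.g. "*-*-* 03:00:00"

  # Oneshot service: runs the script once per trigger and exits.
  cat > "$dir/$name.service" <<EOF
[Unit]
Description=$name (triggered by $name.timer)

[Service]
Type=oneshot
User=app
ExecStart=$script
EOF

  # Timer with the same base name activates the service above on schedule.
  # Persistent=true catches up on a run missed while the timer was inactive,
  # for example because the machine was powered off.
  cat > "$dir/$name.timer" <<EOF
[Unit]
Description=Schedule for $name

[Timer]
OnCalendar=$when
Persistent=true

[Install]
WantedBy=timers.target
EOF
}
```

On a real host you would call it as root with `/etc/systemd/system` as the directory, then run `systemctl daemon-reload` and `systemctl enable --now nightly-job.timer`; the timer should then show up in `systemctl list-timers`.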

I Want to Hear Your Story

Tell me: what deployment setup are you using now? A single bare-metal machine? Docker Compose? Or are you already aboard the K8s ship? Share your choice and your pitfalls in the comments.

Next up: when you really should use K8s. I’ll give you three hard metrics; don’t rush to switch until you hit them.

Found This Article Helpful?

- Like: If this helped, give it a like to help more people see it

- Share: Share with friends who might be debating whether to use K8s

- Follow: Follow Dream Beast Programming so you don’t miss more practical tech articles

- Comment: Have questions or thoughts? Let’s discuss them in the comments

Your support is my biggest motivation to keep creating!