Performance Optimization

17 posts

Homebrew Too Slow? Try This Rust Version, 20x Faster

ZeroBrew is a Rust-based Homebrew alternative that installs packages lightning fast. Let’s talk about why it’s so fast and how to safely try it out.

Const Generics: This Rust Feature Cut Our Code by 85%

From 8,347 lines to 1,243 lines — the rebirth of a cryptography library

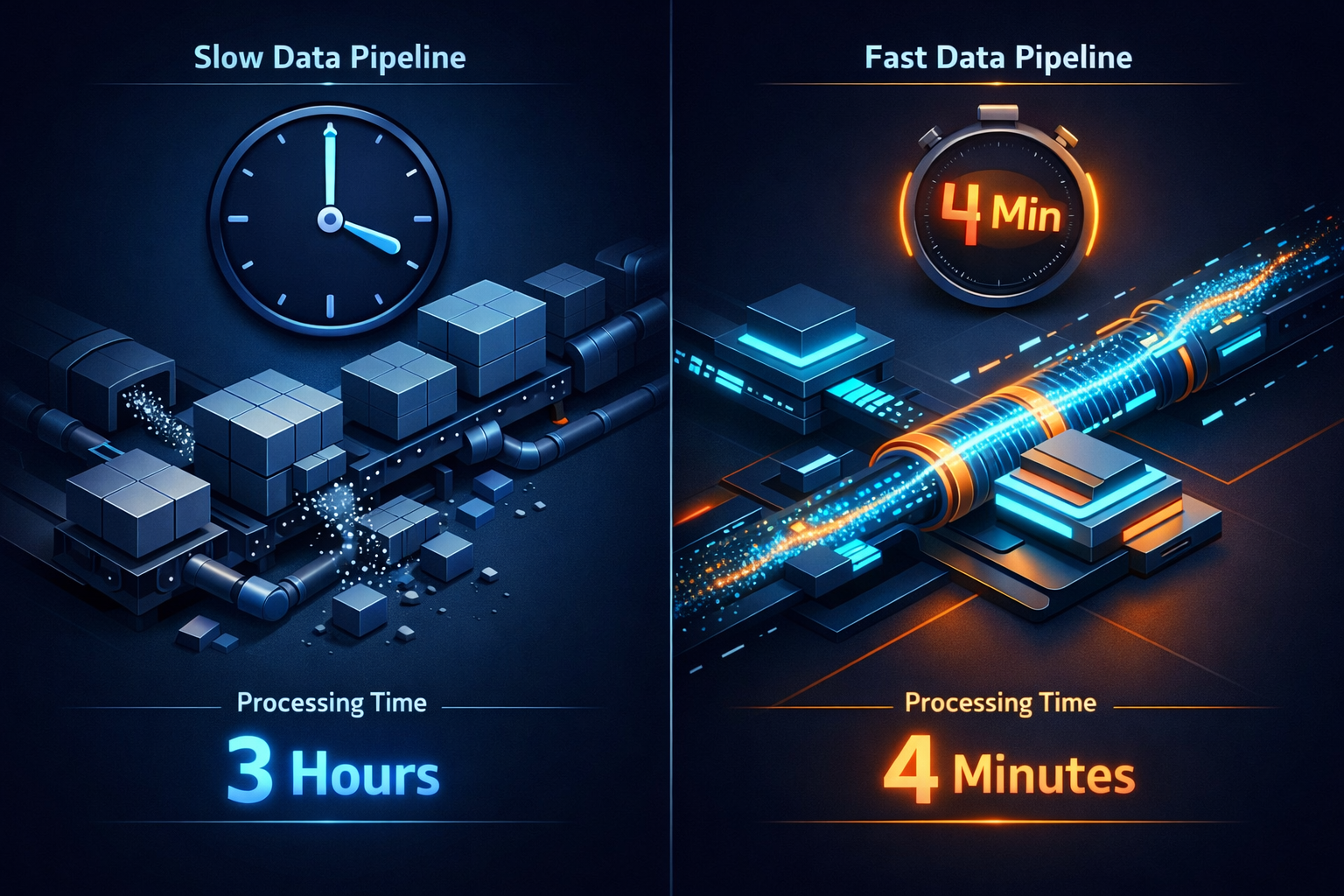

I Rewrote Our Python Data Pipeline in Rust: 3 Hours to 4 Minutes, 40x Faster

A real-world case study of rewriting a Python data pipeline with Rust + DuckDB. Daily ETL jobs went from 3 hours to 4 minutes, memory usage dropped from 12 GB to 600 MB, and server costs fell by two-thirds.

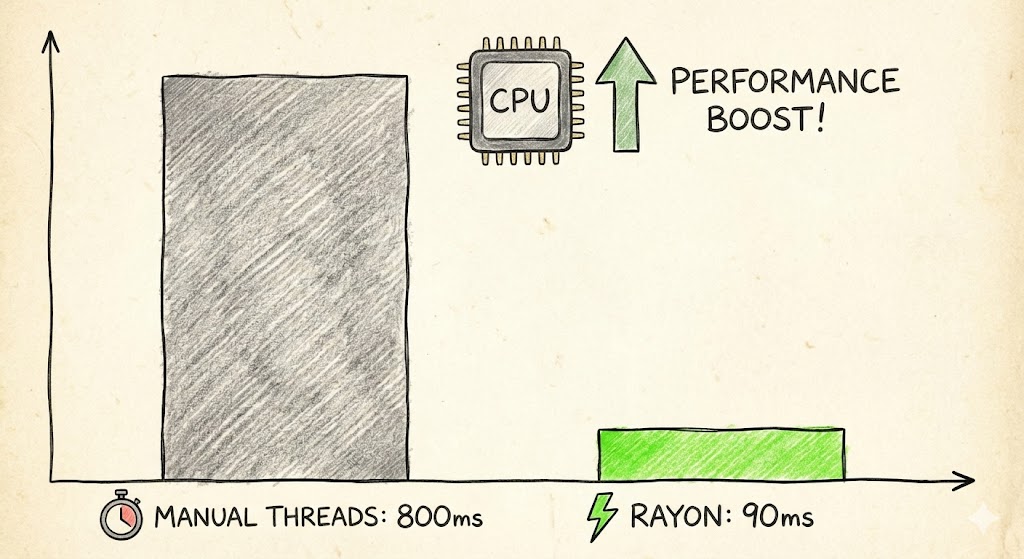

From 800ms to 90ms: How Rust Rayon Saved My Multithreading Nightmare

A real Rust concurrency case study: replacing manual threads with Rayon’s work-stealing scheduler and getting a 9x speedup.

Rust mmap Memory-Mapped I/O: Why Reading Files Can Be as Fast as Accessing Memory

A plain-English guide to memory-mapped I/O (mmap) in Rust. Why is it 5-10x faster than traditional file reading, and how does zero-copy access deliver that speedup? This hands-on memmap2 tutorial uses real-life analogies to demystify a seemingly mysterious low-level technique.

Evan You Announces OXC Tools: 45x Faster Than Prettier, 50-100x Faster Than ESLint

Vue.js creator Evan You unveils the OXC tool suite, with oxfmt 45x faster than Prettier and oxlint 50-100x faster than ESLint, aimed at speeding up the frontend development workflow.

Rust Makes Qwen LLM Models Blazing Fast Again: 6x Speed Tokenizer Black Magic

bpe-qwen: a BPE tokenization core rewritten in Rust for Qwen models, tested at a 6x–12x speedup and compatible with the HuggingFace API. A one-line replacement to accelerate your inference pipeline.

When My Fitness App Was Lagging Like a Slideshow, Rust Came to the Rescue

React Native makes cross-platform app development easy, but complex calculations can be a headache. Here’s how I used Rust to solve the performance bottlenecks.

Rust 1.90: The Update Developers Have Been Begging For

If Rust build times have ever ruined your coding flow, this release fixes what matters most.

CPU-GPU Overlap Inference Starter Guide: Cut 30% Wait Time with Python

Clarify the CPU/GPU split in PyTorch inference and walk through overlapping techniques that slash latency.