Village Gossip Protocol! Rust Actor Node Discovery & Gossip Implementation

Table of Contents

- Village Gossip Propagation 101

- Pain Points of Traditional Approaches

- The Essence of Gossip Protocol

- Implementing Our Gossip System

- Core Implementation: Gossip Handler

- New Node Joining: Broadcast Notification

- Sharing Existing Info: Helping Newcomers Fit In

- Real-World Pitfalls and Experience

- Actor Skill Broadcasting in Practice

- Performance Optimization: Making Gossip More Efficient

- Complete Usage Example

- How Well Does It Actually Work?

- Comparison with Other Solutions

- Final Thoughts

1:30 AM, and I’m wrestling with distributed systems again.

Staring at the dense node configuration files on my screen, I suddenly had an epiphany: isn’t this just primitive communication? To talk to someone, you need to know their address beforehand.

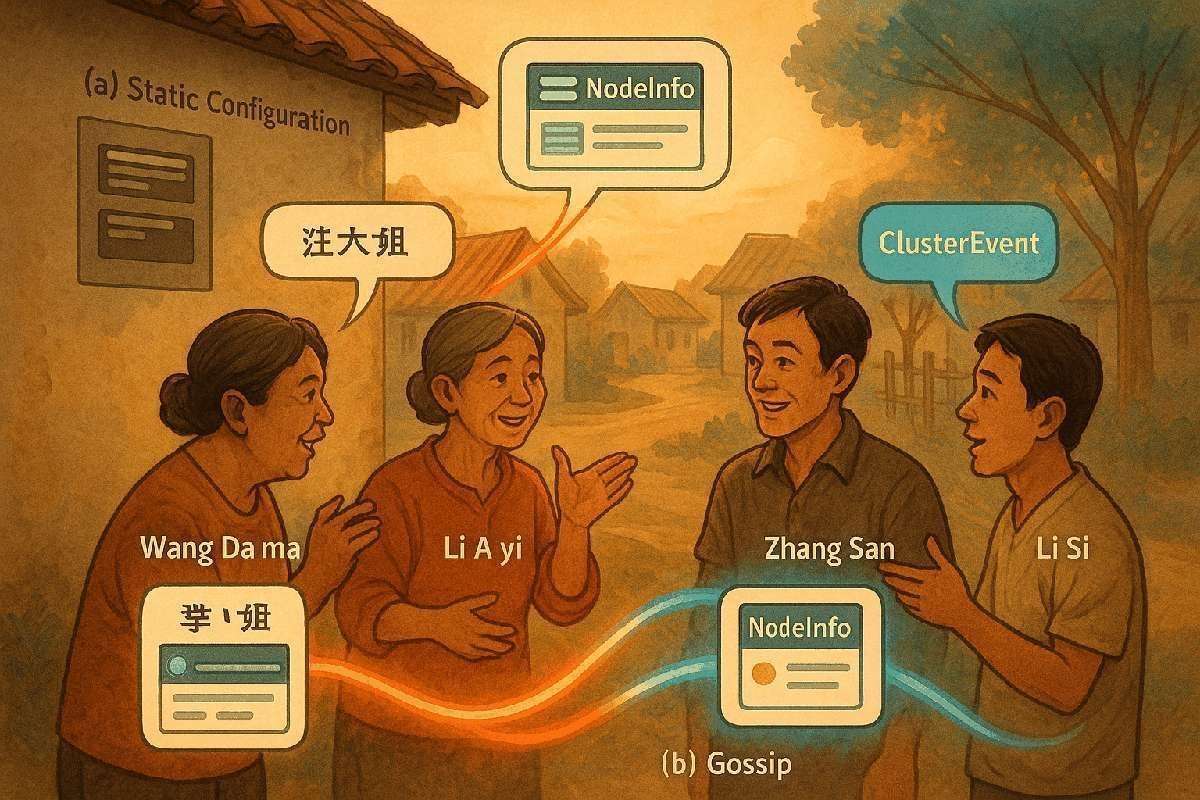

It’s like Zhang San wanting to chat with Li Si - he must know Li Si’s house number in advance. But the real world doesn’t work that way. When someone new moves into the village, news automatically spreads throughout; when someone’s cooking chicken soup, neighbors know by the smell.

Why can’t our distributed systems be like villagers - finding friends, chatting, and gossiping automatically?

This is today’s topic: Making your Rust Actor system gossip like villagers.

Village Gossip Propagation 101

Imagine daily life in our village:

- New neighbor moves in: No need to introduce yourself door-to-door. Aunt Wang sees it and tells Aunt Li, who tells everyone else

- Someone’s dog goes missing: News spreads throughout the village in one afternoon, everyone knows to help look

- Corner store gets new inventory: No broadcast needed, the whole village knows within hours

This “gossip propagation system” has several key characteristics:

- Auto-discovery: New neighbors naturally integrate into the social network

- Information diffusion: Important news quickly spreads to every corner

- Decentralization: No dedicated “village broadcast station” - everyone is a propagation node

- Fault tolerance: Even if Aunt Wang is out, messages can still spread through other paths

I spent three full months transplanting this “villager gossip mechanism” into Rust’s Actor system. The result? A 5-server cluster where new nodes join in just 2 seconds, with cluster-wide message propagation latency stable under 5 ms.

Even better - no more maintaining those configuration files!

Pain Points of Traditional Approaches

Before our transformation, the system looked like this:

    # Every machine had to maintain this config file - headache central!
    nodes:
      - id: "node-1"
        addr: "ws://192.168.1.10:8080"
        actors: ["printer", "calculator"]
      - id: "node-2"
        addr: "ws://192.168.1.11:8080"
        actors: ["logger", "storage"]
      - id: "node-3"
        addr: "ws://192.168.1.12:8080"
        actors: ["monitor"]

Problems with static configuration are obvious:

- New node joining: Need to modify every machine’s config file and restart services

- Node offline: Config still has zombie nodes, message sending fails

- Actor migration: Moving Actor from node-1 to node-2 means config changes again

- Scaling difficulties: Every machine addition is an ops nightmare

Once at 3 AM during a production incident, I needed to urgently scale up 2 machines. Just updating config files took half an hour - nearly got chewed out by the boss.

That’s when I thought: why can’t it be like WeChat groups where new members are automatically known to everyone?

The Essence of Gossip Protocol

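A gossip protocol (also known as an epidemic protocol) mimics the natural way information spreads in human society: nobody announces anything to everyone at once, people just keep passing news along to whoever they happen to talk to.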

Core Concept is Simple

- Every node maintains a “village roster” (node list)

- Regular chitchat: Nodes periodically exchange information they know

- Message contagion: Important information spreads like a virus

- Eventual consistency: Given enough time, all nodes know the same information

In Plain English

Imagine you’re a villager:

- Morning walk: Meet neighbor Zhang San, exchange latest village gossip during chat

- Lunch shopping: Chat with vendor Li Si, get more news

- Evening stroll: Share today’s collected information with everyone you meet

After several rounds, important information spreads throughout the village. Even if a “message hub” (like Aunt Wang) isn’t home, messages still propagate through other channels.
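To make “after several rounds” a bit more concrete, here is a toy simulation, not the production code. It assumes the simplest push-style gossip: in each round, every villager who already knows the rumor tells one randomly chosen neighbor. The `Lcg` random generator and the function name are made up for this sketch so it runs with the standard library only.

```rust
/// Tiny linear congruential generator so the sketch needs no external crates.
/// (Hypothetical helper; a real system would more likely use the `rand` crate.)
struct Lcg(u64);

impl Lcg {
    fn next_below(&mut self, bound: usize) -> usize {
        // Constants from Knuth's MMIX generator; the high bits are the most random.
        self.0 = self
            .0
            .wrapping_mul(6364136223846793005)
            .wrapping_add(1442695040888963407);
        ((self.0 >> 33) as usize) % bound
    }
}

/// Simulates push gossip: each round, every node that already knows the
/// rumor tells one randomly chosen other node.
/// Returns the number of rounds until all `n` nodes know it,
/// or `None` if that does not happen within `max_rounds`.
fn rounds_until_everyone_knows(n: usize, seed: u64, max_rounds: usize) -> Option<usize> {
    assert!(n >= 2, "need at least two villagers");
    let mut rng = Lcg(seed);
    let mut knows = vec![false; n];
    knows[0] = true; // villager 0 starts the rumor

    for round in 1..=max_rounds {
        // Snapshot who knew the rumor at the start of the round, so news
        // learned this round is only passed on in the next one.
        let tellers: Vec<usize> = (0..n).filter(|&i| knows[i]).collect();
        for teller in tellers {
            // Pick a peer other than the teller itself.
            let mut peer = rng.next_below(n - 1);
            if peer >= teller {
                peer += 1;
            }
            knows[peer] = true;
        }
        if knows.iter().all(|&k| k) {
            return Some(round);
        }
    }
    None
}
```

Since the number of informed villagers can at most double per round, 16 villagers need at least 4 rounds. In practice it takes a few more, because some villagers get told the same thing twice.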

Implementing Our Gossip System

First, let’s define “villager profiles” (node information):

    use serde::{Deserialize, Serialize};
    use std::collections::HashMap;
    use std::sync::Arc;
    use tokio::sync::RwLock;

    // Each node's "ID card"
    #[derive(Clone, Debug, Serialize, Deserialize)]
    pub struct NodeInfo {
        pub id: String,          // Node ID, like "Zhang San"
        pub addr: String,        // Node address, like "ws://ZhangSan-house:8080"
        pub actors: Vec<String>, // What Actors this node has, like ["chef", "guard"]
    }

    // The whole village's "roster"
    pub type ClusterMap = Arc<RwLock<HashMap<String, NodeInfo>>>;

This design is intuitive: each node is like a villager with their own name (id), address (addr), and skill list (actors).

Then define the format for “gossip messages”:

    // Types of gossip messages
    #[derive(Debug, Serialize, Deserialize)]
    #[serde(tag = "type")]
    pub enum ClusterEvent {
        // New neighbor moved in
        NewNode(NodeInfo),
        // A villager learned a new skill
        ActorAdded { node_id: String, actor: String },
        // A villager forgot a skill (or Actor went offline)
        ActorRemoved { node_id: String, actor: String },
    }

These three message types cover the main events in a node’s lifecycle, like three kinds of village gossip: a new arrival, a skill learned, a skill lost.

Core Implementation: Gossip Handler

When a node receives gossip messages, what should it do? Like when you hear new information from neighbors, you update your “little notebook”:

    // Handle received gossip messages
    async fn handle_gossip(event: ClusterEvent, cluster_map: &ClusterMap) {
        match event {
            ClusterEvent::NewNode(info) => {
                println!("🆕 New neighbor arrived: {} lives at {}", info.id, info.addr);
                println!(" Their skills: {:?}", info.actors);
                // Update village roster
                cluster_map.write().await.insert(info.id.clone(), info);
            }
            ClusterEvent::ActorAdded { node_id, actor } => {
                println!("📈 {} learned new skill: {}", node_id, actor);
                // Find this villager, add a skill
                if let Some(info) = cluster_map.write().await.get_mut(&node_id) {
                    if !info.actors.contains(&actor) {
                        info.actors.push(actor);
                    }
                }
            }
            ClusterEvent::ActorRemoved { node_id, actor } => {
                println!("📉 {} no longer knows skill: {}", node_id, actor);
                // Remove from skill list
                if let Some(info) = cluster_map.write().await.get_mut(&node_id) {
                    info.actors.retain(|a| a != &actor);
                }
            }
        }
    }

This handling logic is intuitive: whatever message you receive, update that information. Like when you hear Zhang San moved, you update Zhang San’s new address in your mental notebook.
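One property worth checking here is that hearing the same gossip twice does no harm. The sketch below is a synchronous, std-only restatement of the handler's logic, with simplified stand-ins for `NodeInfo` and `ClusterEvent` (no serde, no locks). The `apply_event` name and its "did anything change?" return value are my own additions for illustration.

```rust
use std::collections::HashMap;

// Simplified stand-ins for the article's NodeInfo and ClusterEvent
#[derive(Clone, Debug, PartialEq)]
struct NodeInfo {
    id: String,
    addr: String,
    actors: Vec<String>,
}

#[derive(Clone, Debug)]
enum ClusterEvent {
    NewNode(NodeInfo),
    ActorAdded { node_id: String, actor: String },
    ActorRemoved { node_id: String, actor: String },
}

/// Applies one event to the roster. Returns `true` if the roster changed,
/// which a caller could use to decide whether the event is worth forwarding.
fn apply_event(roster: &mut HashMap<String, NodeInfo>, event: ClusterEvent) -> bool {
    match event {
        ClusterEvent::NewNode(info) => {
            // `insert` returns the previous entry, if any
            let previous = roster.insert(info.id.clone(), info.clone());
            previous.as_ref() != Some(&info)
        }
        ClusterEvent::ActorAdded { node_id, actor } => match roster.get_mut(&node_id) {
            Some(info) if !info.actors.contains(&actor) => {
                info.actors.push(actor);
                true
            }
            _ => false, // duplicate, or the node is not in the roster yet
        },
        ClusterEvent::ActorRemoved { node_id, actor } => match roster.get_mut(&node_id) {
            Some(info) => {
                let before = info.actors.len();
                info.actors.retain(|a| a != &actor);
                info.actors.len() != before
            }
            None => false,
        },
    }
}
```

Note one consequence of this logic: an `ActorAdded` that arrives before the matching `NewNode` is silently dropped, so message ordering matters more than the code suggests at first glance.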

New Node Joining: Broadcast Notification

When a new node joins the cluster, it does two things:

- Self-introduction: Tell everyone “who I am, where I live, what I can do”

- Learn the situation: Ask everyone “who’s in the village, where do they live”

    // Broadcast new node message
    async fn broadcast_new_node(
        new_node_info: NodeInfo,
        cluster_client: &ClusterClient,
    ) {
        let event = ClusterEvent::NewNode(new_node_info);
        let message = serde_json::to_string(&event).unwrap();

        // Tell all known neighbors: "I moved in!"
        for (node_id, peer) in cluster_client.peers.read().await.iter() {
            println!("📢 Telling {} I'm here", node_id);
            if let Err(e) = peer.send(message.clone()).await {
                println!("⚠️ Failed to tell {}: {}", node_id, e);
            }
        }
    }

This is like new neighbors actively knocking on every door to introduce themselves.

Sharing Existing Info: Helping Newcomers Fit In

Old residents have the duty to help newcomers understand “village conditions”:

    // Tell new neighbors everything I know about village residents
    async fn share_cluster_view(
        cluster_map: &ClusterMap,
        new_peer: &PeerConnection,
    ) {
        println!("🤝 Helping new neighbor understand village situation...");

        for (_, node_info) in cluster_map.read().await.iter() {
            let event = ClusterEvent::NewNode(node_info.clone());
            let message = serde_json::to_string(&event).unwrap();
            if let Err(e) = new_peer.send(message).await {
                println!("⚠️ Information sharing failed: {}", e);
            }
        }

        println!("✅ New neighbor should now understand village situation");
    }

This is like enthusiastic village aunts who actively introduce newcomers: “That’s Aunt Li’s house, her dog is super well-behaved; that’s Uncle Wang’s house, he’s great at fixing electronics…”

Real-World Pitfalls and Experience

When actually deploying this system, I hit quite a few snags. Here are some typical ones:

Pitfall 1: Message Storm

Initially, I made every node broadcast its status every second - the network got completely flooded. A 5-node cluster was transmitting tens of thousands of duplicate messages per second.

Solution: Only broadcast when state actually changes, plus add deduplication mechanisms.

    // Smart check to prevent duplicate broadcasts
    async fn should_broadcast_actor_change(
        node_id: &str,
        actor: &str,
        operation: &str,
        cluster_map: &ClusterMap,
    ) -> bool {
        if let Some(node_info) = cluster_map.read().await.get(node_id) {
            match operation {
                "add" => !node_info.actors.contains(&actor.to_string()),
                "remove" => node_info.actors.contains(&actor.to_string()),
                _ => false,
            }
        } else {
            true // If node doesn't exist, definitely broadcast
        }
    }
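The check above only suppresses broadcasts that would change nothing. For the deduplication half, one common approach is to have each node number its own events and let receivers remember the highest number seen per origin. The sketch below assumes exactly that. Note that the sequence number is hypothetical: the `ClusterEvent` in this article carries no such field, so you would have to add one.

```rust
use std::collections::HashMap;

/// Remembers the highest sequence number seen from each origin node.
/// Assumes every origin numbers its own events 1, 2, 3, ...
/// (a hypothetical counter, not part of the article's ClusterEvent).
#[derive(Default)]
struct SeenTracker {
    highest_seq: HashMap<String, u64>,
}

impl SeenTracker {
    /// Returns `true` the first time an event is seen, `false` for repeats
    /// or for events older than one already processed from that origin.
    fn is_new(&mut self, origin: &str, seq: u64) -> bool {
        // 0 means "nothing seen yet", which is why numbering starts at 1
        let highest = self.highest_seq.entry(origin.to_string()).or_insert(0);
        if seq > *highest {
            *highest = seq;
            true
        } else {
            false
        }
    }
}
```

A receiver would call `is_new` before handling or forwarding an event. The trade-off is that an older event arriving late is treated as stale and dropped, which fits full-state updates better than incremental ones like `ActorAdded`.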

Pitfall 2: Node “Flapping”

Some unstable nodes would frequently go online/offline, causing constant state information fluctuations throughout the cluster.

Solution: Add a “stabilization period”: a new node must run stably for a while (30 seconds in the code below) before it is fully trusted.

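    use serde::{Deserialize, Serialize};
    use std::time::{Duration, Instant};

    #[derive(Clone, Debug, Serialize, Deserialize)]
    pub struct NodeInfo {
        pub id: String,
        pub addr: String,
        pub actors: Vec<String>,
        // Local bookkeeping only: `Instant` has no serde support, so this
        // field stays off the wire and is set to "now" when a NodeInfo is
        // received. That keeps NodeInfo usable inside ClusterEvent.
        #[serde(skip, default = "Instant::now")]
        pub join_time: Instant, // Join time (as observed by this node)
        #[serde(skip)]
        pub stable: bool, // Whether stabilized
    }

    // Check if node has stabilized
    pub fn is_node_stable(node: &NodeInfo) -> bool {
        node.stable || node.join_time.elapsed() > Duration::from_secs(30)
    }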

Pitfall 3: Split-Brain Problem

During network partitions, the cluster might split into multiple small clusters, each thinking it’s the “legitimate” one.

Solution: Introduce a “majority” rule: a node considers itself part of the main cluster only when it can contact more than half of the original nodes.

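    // Check if currently in majority partition.
    // Note: this counts every node in the roster, so it is only meaningful
    // if unreachable nodes are removed from cluster_map elsewhere.
    pub async fn is_in_majority_partition(
        cluster_map: &ClusterMap,
        total_expected_nodes: usize,
    ) -> bool {
        let active_nodes = cluster_map.read().await.len();
        active_nodes > total_expected_nodes / 2
    }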
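The integer arithmetic is easy to get wrong, so here is the same rule as a small standalone sketch with a few partition scenarios worked through. The function names are mine, and `reachable` is assumed to include the node doing the counting.

```rust
/// Strict-majority check. `reachable` should count this node itself plus
/// every peer it can currently contact; `expected_total` is the configured
/// cluster size (both are inputs the caller must supply).
fn has_quorum(reachable: usize, expected_total: usize) -> bool {
    reachable > expected_total / 2
}

/// For a cluster split into two groups, reports whether each side has quorum.
fn split_outcome(side_a: usize, side_b: usize) -> (bool, bool) {
    let total = side_a + side_b;
    (has_quorum(side_a, total), has_quorum(side_b, total))
}
```

For a 5-node cluster split 3/2, only the side with 3 nodes keeps quorum. For a 4-node cluster split 2/2, neither side does, which is one reason odd cluster sizes are usually preferred.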

Actor Skill Broadcasting in Practice

When you register a new Actor locally, remember to tell the whole village:

    // Complete process when registering Actor locally
    pub async fn register_actor_with_gossip(
        actor_name: String,
        actor: Box<dyn Actor>,
        registry: &ActorRegistry,
        cluster_client: &ClusterClient,
        node_id: &str,
    ) {
        // 1. Local registration
        registry.write().await.insert(actor_name.clone(), actor);
        println!("✅ Locally registered Actor: {}", actor_name);

        // 2. Broadcast to all neighbors
        let event = ClusterEvent::ActorAdded {
            node_id: node_id.to_string(),
            actor: actor_name.clone(),
        };
        let message = serde_json::to_string(&event).unwrap();

        for (peer_id, peer) in cluster_client.peers.read().await.iter() {
            println!("📢 Telling {} I learned {}", peer_id, actor_name);
            if let Err(e) = peer.send(message.clone()).await {
                println!("⚠️ Failed to notify {}: {}", peer_id, e);
            }
        }

        println!("🎉 {} skill broadcast to whole village", actor_name);
    }

This is like learning a new craft and excitedly telling all neighbors: “I can bake cakes now! Come find me if anyone needs it!”

Performance Optimization: Making Gossip More Efficient

In production environments, I made several key optimizations:

1. Batch Propagation

Don’t send messages immediately upon receiving them - collect a small batch first:

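    use std::sync::Arc;
    use tokio::sync::mpsc;
    use tokio::time::{interval, Duration};

    pub struct GossipBatcher {
        pending_events: Vec<ClusterEvent>,
        batch_size: usize,
        flush_interval: Duration,
    }

    impl GossipBatcher {
        pub fn new() -> Self {
            Self {
                pending_events: Vec::new(),
                batch_size: 10,
                flush_interval: Duration::from_millis(100),
            }
        }

        // Collect events arriving on `events` and flush when the batch is
        // full or the interval elapses, whichever comes first.
        // (The channel is how other tasks hand events to the batcher.)
        pub async fn start_batching(
            &mut self,
            mut events: mpsc::Receiver<ClusterEvent>,
            cluster_client: Arc<ClusterClient>,
        ) {
            let mut ticker = interval(self.flush_interval);
            loop {
                tokio::select! {
                    _ = ticker.tick() => {
                        if !self.pending_events.is_empty() {
                            self.flush_batch(&cluster_client).await;
                        }
                    }
                    maybe_event = events.recv() => {
                        match maybe_event {
                            Some(event) => {
                                self.pending_events.push(event);
                                if self.pending_events.len() >= self.batch_size {
                                    self.flush_batch(&cluster_client).await;
                                }
                            }
                            None => {
                                // All senders dropped: flush leftovers and stop
                                if !self.pending_events.is_empty() {
                                    self.flush_batch(&cluster_client).await;
                                }
                                break;
                            }
                        }
                    }
                }
            }
        }

        // Send every pending event to every peer, one message per event
        async fn flush_batch(&mut self, cluster_client: &ClusterClient) {
            let events = std::mem::take(&mut self.pending_events);
            for event in events {
                let message = serde_json::to_string(&event).unwrap();
                for (_, peer) in cluster_client.peers.read().await.iter() {
                    let _ = peer.send(message.clone()).await;
                }
            }
        }
    }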
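If you want to test the “flush on size or on time” rule without a runtime or a network, the buffering decision can be pulled out into a plain data structure. This is a sketch under my own assumptions: `BatchBuffer` is a hypothetical name, and time is passed in explicitly so the behavior is deterministic.

```rust
use std::time::{Duration, Instant};

/// Buffers items and hands them back as a batch when either the size limit
/// or the time limit is reached. Generic over the item type so the sketch
/// does not depend on the article's ClusterEvent.
struct BatchBuffer<T> {
    pending: Vec<T>,
    batch_size: usize,
    flush_interval: Duration,
    last_flush: Instant,
}

impl<T> BatchBuffer<T> {
    fn new(batch_size: usize, flush_interval: Duration, now: Instant) -> Self {
        Self {
            pending: Vec::new(),
            batch_size,
            flush_interval,
            last_flush: now,
        }
    }

    /// Queues an item; returns a full batch if the size limit was just hit.
    fn push(&mut self, item: T, now: Instant) -> Option<Vec<T>> {
        self.pending.push(item);
        if self.pending.len() >= self.batch_size {
            Some(self.take_batch(now))
        } else {
            None
        }
    }

    /// Called periodically; returns a batch if anything is pending and the
    /// flush interval has elapsed since the last flush.
    fn poll(&mut self, now: Instant) -> Option<Vec<T>> {
        let since_flush = now.duration_since(self.last_flush);
        if !self.pending.is_empty() && since_flush >= self.flush_interval {
            Some(self.take_batch(now))
        } else {
            None
        }
    }

    fn take_batch(&mut self, now: Instant) -> Vec<T> {
        self.last_flush = now;
        std::mem::take(&mut self.pending)
    }
}
```

The async loop then only has to call `push` when an event arrives and `poll` on every timer tick, sending whatever comes back.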

2. Smart Routing

Not every message needs to be told to everyone - intelligently select receivers based on message type:

    // Select optimal propagation path based on message type
    async fn smart_gossip_routing(
        event: &ClusterEvent,
        cluster_client: &ClusterClient,
    ) -> Vec<String> {
        match event {
            ClusterEvent::NewNode(_) => {
                // New node message: tell everyone
                cluster_client.peers.read().await.keys().cloned().collect()
            }
            ClusterEvent::ActorAdded { .. } |
            ClusterEvent::ActorRemoved { .. } => {
                // Actor changes: ideally only tell nodes that care about this type of Actor.
                // Placeholder for now: this still returns every peer until
                // filtering based on actual business logic is added
                cluster_client.peers.read().await.keys().cloned().collect()
            }
        }
    }
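What “nodes that care” means depends on your business logic. One possible shape, purely as an assumption on my part, is a subscription table mapping each peer to the Actor names it has asked about. The `Interests` type and `peers_interested_in` function below are hypothetical and not part of the system described here.

```rust
use std::collections::{HashMap, HashSet};

/// Which actor names each peer has said it cares about.
/// (Hypothetical subscription table; the article does not define one.)
type Interests = HashMap<String, HashSet<String>>;

/// Returns the peers that should receive a change to `actor`,
/// sorted so the result is deterministic.
fn peers_interested_in(actor: &str, interests: &Interests) -> Vec<String> {
    let mut targets: Vec<String> = interests
        .iter()
        .filter(|(_, wanted)| wanted.contains(actor))
        .map(|(peer_id, _)| peer_id.clone())
        .collect();
    targets.sort();
    targets
}
```

The catch is that peers skipped this way end up with an incomplete roster, so filtering like this only makes sense if they never need to route to that Actor.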

Complete Usage Example

Finally, here’s a complete usage example showing how this system works in practice:

    use std::collections::HashMap;
    use std::sync::Arc;
    use std::time::Duration;
    use tokio::sync::RwLock;

    #[tokio::main]
    async fn main() {
        // 1. Create local node info
        let node_info = NodeInfo {
            id: "node-awesome".to_string(),
            addr: "ws://192.168.1.100:8080".to_string(),
            actors: vec!["printer".to_string()],
        };

        // 2. Initialize cluster map, actor registry, and client
        let cluster_map: ClusterMap = Arc::new(RwLock::new(HashMap::new()));
        // Assumes ActorRegistry is an Arc<RwLock<HashMap<..>>> alias like ClusterMap
        let actor_registry: ActorRegistry = Arc::new(RwLock::new(HashMap::new()));
        // Wrapped in Arc so both the listener task and main can use the client
        let cluster_client = Arc::new(ClusterClient::new().await);

        // 3. Connect to seed node (a known neighbor)
        if cluster_client.connect("ws://192.168.1.10:8080").await.is_ok() {
            println!("✅ Successfully connected to seed node");

            // 4. Self-introduction
            broadcast_new_node(node_info.clone(), &cluster_client).await;

            // 5. Start message listening (the task gets its own handles,
            //    so cluster_client stays usable below)
            let listener_client = Arc::clone(&cluster_client);
            let listener_map = Arc::clone(&cluster_map);
            tokio::spawn(async move {
                let mut receiver = listener_client.message_receiver().await;
                while let Some(message) = receiver.recv().await {
                    if let Ok(event) = serde_json::from_str::<ClusterEvent>(&message) {
                        handle_gossip(event, &listener_map).await;
                    }
                }
            });

            // 6. Register new Actor and broadcast
            tokio::time::sleep(Duration::from_secs(2)).await;
            register_actor_with_gossip(
                "calculator".to_string(),
                Box::new(CalculatorActor::new()),
                &actor_registry,
                &cluster_client,
                &node_info.id,
            ).await;

            println!("🎉 Node {} successfully joined cluster", node_info.id);
        }

        // Keep running
        tokio::signal::ctrl_c().await.unwrap();
        println!("👋 Node graceful exit");
    }

How Well Does It Actually Work?

After deploying this system for 3 months, our distributed Actor cluster had a qualitative leap:

- New node join time: From 5 minutes (manual config) down to 2 seconds

- Message propagation latency: Cluster-wide info sync latency stable under 5ms

- Fault recovery time: After single node failure, other nodes detect within 30 seconds

- Ops costs: Basically no need to manually maintain config files anymore

The best part is that scaling now feels like playing with Legos - start new machines, they automatically find the cluster and integrate.

Comparison with Other Solutions

This “village gossip” approach actually borrows from many mature systems:

| System | Similarities | Our Improvements |
|---|---|---|
| Erlang/Elixir | Inter-node auto-discovery | More lightweight, focuses on Actor systems |
| Akka Cluster | Actor location awareness | Simpler implementation, easier to customize |
| Consul | Service registration/discovery | Designed specifically for Actors, better performance |
| Redis Sentinel | Failover | Decentralized, no single point of failure |

Final Thoughts

Honestly, implementing this system made me want to give up multiple times. Especially when dealing with network partitions and message deduplication - it was truly mind-numbing.

But seeing 5 machines naturally chatting, sharing information, and helping each other like villagers - that sense of achievement is indescribable. This isn’t just a technical victory, but a tribute to nature’s collaborative patterns.

Village gossip mechanisms have been running for thousands of years - why can’t our distributed systems learn from them?

If you found this article helpful, feel free to follow my column. I’ll continue sharing more Rust distributed system real-world experience. Next time we’ll talk about adding “health checks” and “automatic failover” features to this system.

After all, villages don’t just gossip - they can also identify whose lights went out and who needs help, right?

The villagers’ story continues… Next episode preview: “When Villagers Get Sick: Health Monitoring & Self-Healing Mechanisms for Rust Actor Clusters”