Rust Cluster Commands: Managing Distributed Systems Like Conducting an Orchestra

Table of Contents

- When Your Distributed System Needs a Conductor

- Defining Your Conductor’s Language

- Message Classification: Actor Mail vs System Commands

- Building the Command Processing Center

- Implementing the Command Processor

- Sending Commands: Pick Up Your Baton

- Hands-on Practice: Making the Cluster Obey Commands

- Lessons from Experience: Pitfalls I’ve Encountered in Real Projects

- Extension Ideas: Making Your Baton More Powerful

- Summary

When Your Distributed System Needs a Conductor

Imagine you’ve just assembled a symphony orchestra. Each node is a musician playing their own instrument (processing actor messages). But what if you suddenly need to pause the entire orchestra, or restart just the violin section?

This is exactly what cluster control commands solve. After building a WebSocket-based distributed actor system, we need a way to command the entire cluster, just like a conductor waving their baton.

Defining Your Conductor’s Language

First, we need to define a set of control commands, like the conductor’s gesture language:

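    use serde::{Deserialize, Serialize};

    // Adjacent tagging ("type" + "data") is used here because with `tag = "type"`
    // alone, serde cannot serialize a newtype variant that wraps a primitive,
    // such as `ScaleUp(u32)`.
    #[derive(Debug, Serialize, Deserialize)]
    #[serde(tag = "type", content = "data")]
    pub enum ControlMessage {
        Ping { from: String },
        Shutdown,
        Restart,
        ScaleUp(u32), // Example: scale to N workers
    }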

These commands aren’t addressed to specific actors; they go directly to the node system itself. Just as a conductor doesn’t tell a violinist how to play each note but coordinates the whole orchestra, these commands steer nodes rather than individual actors.

Message Classification: Actor Mail vs System Commands

In a real mail system, you distinguish between personal letters and company announcements. Similarly, in our cluster we need to distinguish between different types of messages:

    #[derive(Debug, Serialize, Deserialize)]
    #[serde(tag = "kind")]
    pub enum ClusterPacket {
        Actor(NetworkMessage),   // Messages between actors
        Control(ControlMessage), // System control commands
        Event(ClusterEvent),     // Cluster events (from Part 6)
    }

The benefit of this design is that the receiver can immediately see what type of message it is, just like seeing an “Urgent” mark on an envelope.

Building the Command Processing Center

In each node’s WebSocket server, we need a central dispatch room to handle different types of messages:

    match serde_json::from_str::<ClusterPacket>(&msg_str) {
        Ok(ClusterPacket::Actor(actor_msg)) => {
            // Send to local actor - this is dialogue between actors
            ...
        }
        Ok(ClusterPacket::Control(control_msg)) => {
            handle_control(control_msg).await; // System commands need special handling
        }
        Ok(ClusterPacket::Event(event)) => {
            handle_gossip(event, &cluster_map).await; // Cluster gossip messages
        }
        Err(err) => {
            println!("❌ Failed to parse cluster message: {err}");
        }
    }

Implementing the Command Processor

Now let’s implement the specific command handling logic. This is like giving each node a smart assistant that knows how to execute various instructions:

    async fn handle_control(msg: ControlMessage) {
        match msg {
            ControlMessage::Ping { from } => {
                println!("📡 Ping received from {from}");
                // Like responding to "testing, testing, 1-2-3"
            }
            ControlMessage::Shutdown => {
                println!("🛑 Shutdown received. Exiting...");
                // Ends the process immediately; destructors do not run
                std::process::exit(0);
            }
            ControlMessage::Restart => {
                println!("🔄 Restart triggered! (Not implemented yet)");
                // Like restarting performance after intermission
            }
            ControlMessage::ScaleUp(n) => {
                println!("📈 Scaling up to {n} workers!");
                // Recruiting more musicians to join the orchestra
            }
        }
    }
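The `Shutdown` arm above ends the process on the spot. As a minimal sketch of a gentler alternative, assume a shared flag that the control handler sets and that worker loops check between tasks. `ShutdownFlag` and `run_until_shutdown` are hypothetical names introduced here for illustration; they are not part of the code from earlier parts.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;

/// Hypothetical shared flag: the control handler sets it, workers poll it.
#[derive(Clone, Default)]
pub struct ShutdownFlag(Arc<AtomicBool>);

impl ShutdownFlag {
    pub fn new() -> Self {
        Self::default()
    }

    /// Could be called from the `Shutdown` arm instead of exiting directly.
    pub fn request(&self) {
        self.0.store(true, Ordering::SeqCst);
    }

    pub fn is_requested(&self) -> bool {
        self.0.load(Ordering::SeqCst)
    }
}

/// Runs tasks in order and checks the flag only between tasks, so a task that
/// has started always finishes. Returns how many tasks completed.
pub fn run_until_shutdown<T>(
    tasks: impl IntoIterator<Item = T>,
    flag: &ShutdownFlag,
    mut run_task: impl FnMut(T),
) -> usize {
    let mut completed = 0;
    for task in tasks {
        if flag.is_requested() {
            break; // stop before starting new work
        }
        run_task(task);
        completed += 1;
    }
    completed
}
```

Once the worker loop returns, the node can release its resources and exit normally, which is the musician finishing the current measure before putting the instrument down.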

Sending Commands: Pick Up Your Baton

With command definitions and handling logic in place, now we need to be able to send these commands. Add sending functionality to ClusterClient:

    impl ClusterClient {
        /// Sends a control command to one connected node.
        pub async fn send_control(&self, node_id: &str, cmd: ControlMessage) {
            // Caution: the read guard stays alive across the `.await` below.
            // If `peers` is a `std::sync::RwLock`, that makes this future `!Send`.
            if let Some(peer) = self.peers.read().unwrap().get(node_id) {
                let packet = ClusterPacket::Control(cmd);
                let json = serde_json::to_string(&packet).unwrap();
                peer.send(json).await; // Precise direction to specific musician
            } else {
                println!("🚫 Node {node_id} not connected");
                // Like pointing the baton at an empty seat
            }
        }

        /// Sends the same control command to every connected node.
        pub async fn broadcast_control(&self, cmd: ControlMessage) {
            let packet = ClusterPacket::Control(cmd);
            let json = serde_json::to_string(&packet).unwrap();
            for peer in self.peers.read().unwrap().values() {
                peer.send(json.clone()).await; // Commanding the entire hall
            }
        }
    }
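Both methods above are fire-and-forget: the sender never learns whether a command arrived. Here is a minimal sketch of sender-side bookkeeping for confirmations. It assumes each command carries a numeric ID and the receiver echoes that ID back in an acknowledgement; neither exists in the code so far, and `PendingAcks` and its methods are hypothetical names. Timestamps are passed in explicitly so the logic stays easy to test.

```rust
use std::collections::HashMap;
use std::time::{Duration, Instant};

/// Hypothetical sender-side table of commands still waiting for a reply.
#[derive(Default)]
pub struct PendingAcks {
    next_id: u64,
    // command ID -> (target node, time the command was sent)
    waiting: HashMap<u64, (String, Instant)>,
}

impl PendingAcks {
    /// Records a command sent to `node_id` at `sent_at`; returns the ID to attach to it.
    pub fn register(&mut self, node_id: &str, sent_at: Instant) -> u64 {
        let id = self.next_id;
        self.next_id += 1;
        self.waiting.insert(id, (node_id.to_string(), sent_at));
        id
    }

    /// Marks a command as confirmed. Returns false for unknown or duplicate acks.
    pub fn acknowledge(&mut self, id: u64) -> bool {
        self.waiting.remove(&id).is_some()
    }

    /// Lists (ID, node) pairs that have waited longer than `timeout` as of `now`.
    pub fn overdue(&self, now: Instant, timeout: Duration) -> Vec<(u64, String)> {
        let mut late: Vec<(u64, String)> = self
            .waiting
            .iter()
            .filter(|(_, (_, sent_at))| now.duration_since(*sent_at) > timeout)
            .map(|(id, (node, _))| (*id, node.clone()))
            .collect();
        late.sort(); // deterministic order for logging and retries
        late
    }
}
```

A caller would `register` before sending, `acknowledge` when the reply comes back, and periodically check `overdue` to decide whether to retry or flag the node.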

Hands-on Practice: Making the Cluster Obey Commands

Now let’s actually use these control commands:

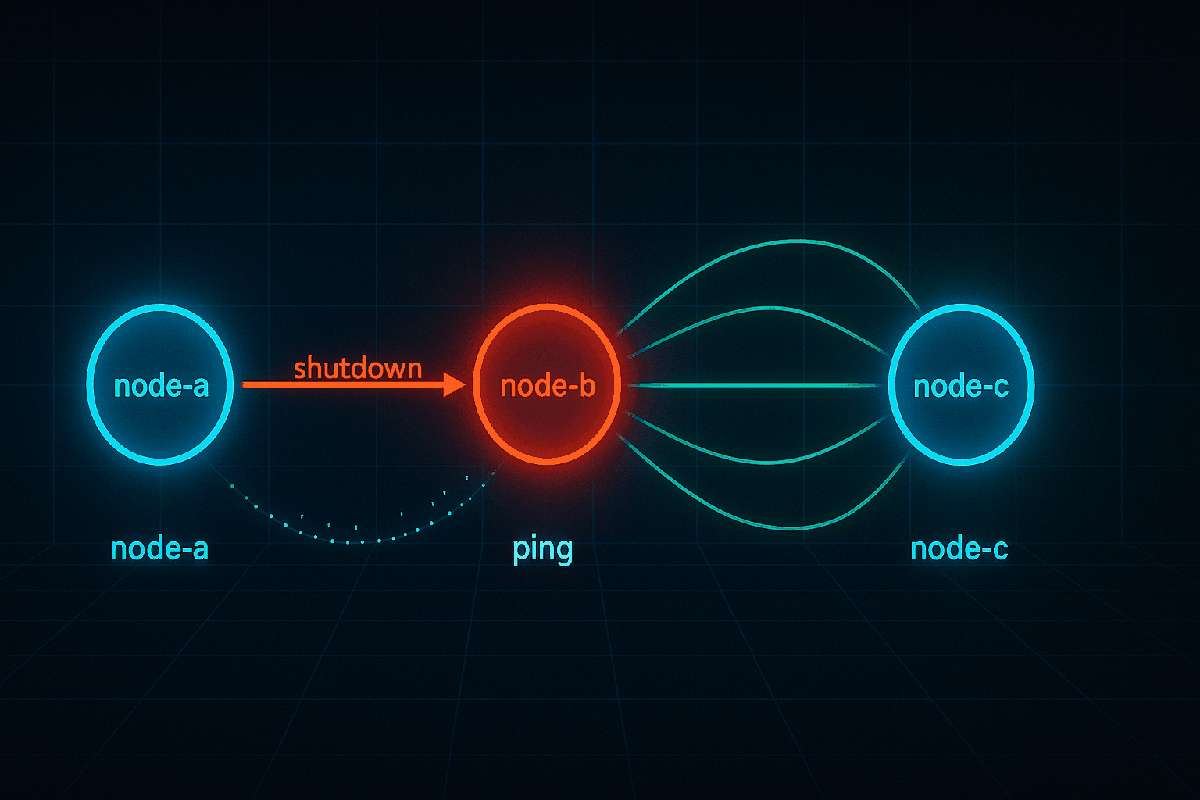

Shut down node B:

    cluster.send_control("node-b", ControlMessage::Shutdown).await;

Send a ping to all nodes:

    cluster.broadcast_control(ControlMessage::Ping { from: "node-a".into() }).await;

The receiving nodes print output like this:

    📡 Ping received from node-a
    🛑 Shutdown received. Exiting...

Lessons from Experience: Pitfalls I’ve Encountered in Real Projects

- Access control is crucial: Not every node should be able to send shutdown commands. In real projects, I recommend adding signature verification, like concert hall security only letting in the real conductor.

- Graceful shutdown is key: Directly calling `std::process::exit(0)` is simple, but in production environments it’s better to complete current tasks and release resources first, like musicians finishing the current measure before putting down their instruments.

- Consider adding confirmation mechanisms: Important commands should wait for confirmation replies, like a conductor expecting musicians to respond with “command received” glances.
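As a minimal sketch of the access-control point, here is an allowlist check that a node could run before acting on a command. It is a simpler stand-in for signature verification, not a replacement: a sender ID inside a message can be forged unless the message is also authenticated. The `Command` enum is a local stand-in for `ControlMessage`, and `ControlPolicy` and the `sender` argument are hypothetical, since the messages defined earlier carry a sender only for `Ping`.

```rust
use std::collections::HashSet;

/// Local stand-in for the article's `ControlMessage`, kept std-only.
#[derive(Debug, Clone, PartialEq)]
pub enum Command {
    Ping,
    Shutdown,
    Restart,
    ScaleUp(u32),
}

/// Hypothetical policy: which node IDs may issue disruptive commands.
pub struct ControlPolicy {
    admins: HashSet<String>,
}

impl ControlPolicy {
    pub fn new<I, S>(admins: I) -> Self
    where
        I: IntoIterator<Item = S>,
        S: Into<String>,
    {
        Self {
            admins: admins.into_iter().map(Into::into).collect(),
        }
    }

    /// `Ping` is harmless and open to any peer; everything else needs an admin sender.
    pub fn is_allowed(&self, sender: &str, cmd: &Command) -> bool {
        match cmd {
            Command::Ping => true,
            Command::Shutdown | Command::Restart | Command::ScaleUp(_) => {
                self.admins.contains(sender)
            }
        }
    }
}
```

The check would sit at the top of the control handler, so a rejected command is logged and dropped before any of its side effects run.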

Extension Ideas: Making Your Baton More Powerful

- Heartbeat detection: Regularly send `Heartbeat` messages for health checks, like a conductor periodically scanning each section

- Status queries: Add `VersionInfo`, `Status`, or `Metrics` messages to get node status

- Dynamic balancing: Implement a `"rebalance"` command to redistribute sharded work

- Configuration synchronization: Broadcast configuration changes through control channels
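To make the heartbeat idea concrete, here is a minimal sketch of the receiving side: remember when each node was last heard from and report the ones that have gone quiet. It assumes a heartbeat message carries the sender's node ID; `HeartbeatTracker` and its methods are hypothetical names, and the caller supplies timestamps so the logic can be tested without sleeping.

```rust
use std::collections::HashMap;
use std::time::{Duration, Instant};

/// Hypothetical per-node record of the most recent heartbeat.
#[derive(Default)]
pub struct HeartbeatTracker {
    last_seen: HashMap<String, Instant>,
}

impl HeartbeatTracker {
    /// Call whenever a heartbeat from `node_id` arrives.
    pub fn record(&mut self, node_id: &str, at: Instant) {
        self.last_seen.insert(node_id.to_string(), at);
    }

    /// Node IDs whose last heartbeat is older than `timeout` as of `now`, sorted.
    pub fn stale_nodes(&self, now: Instant, timeout: Duration) -> Vec<String> {
        let mut stale: Vec<String> = self
            .last_seen
            .iter()
            .filter(|(_, seen)| now.duration_since(**seen) > timeout)
            .map(|(node, _)| node.clone())
            .collect();
        stale.sort();
        stale
    }
}
```

What to do with a stale node (retry, mark it down, announce it to the rest of the cluster) is a separate policy decision that this sketch leaves open.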

Summary

By implementing cluster control commands, we’ve added a powerful management layer to our distributed system. This is like giving a symphony orchestra a professional conductor who can precisely control each musician’s performance.

| Feature | Description |
|---|---|
| `ControlMessage` | Node system commands (shutdown, ping, etc.) |
| `ClusterPacket` | Message wrapper (Actor, Control, Event) |
| `send_control()` | Targeted control messages |
| `broadcast_control()` | Broadcast to all connected nodes |
| `handle_control()` | Execute node-level operations |

Remember, good system design is like good music - it requires both excellent performance from each part and overall coordination. Now pick up your baton and start managing your distributed symphony orchestra!
